In his recent article, Phil Boyer at Crosslink (disclosure: a Leapfin investor and Board Member) highlighted several key investment themes for 2023. One of those themes is the importance of investing in data, data pipelines, and data platforms, AKA “the data assembly line!”

One of his key takeaways is the need to take a first-principles approach: question assumptions and think critically about how data can be used to create competitive advantage.

Of course it’s hard to step back and think about first principles in the midst of massive data sprawl and exponential data growth.

Let me offer a framework that might help.

Most companies face one of two fundamental data challenges (and often both) that prevent them from taking full advantage of their data: breadth and depth.

Let’s start with breadth.

Folks have invested heavily in tech, and with it the SaaS landscape has exploded. APIs are everywhere, emitting data from a slew of disparate sources and structures.

- ERP

- OMS

- CRM

- PSP

- APS

- BI

- EDW

- HRIS

…and that’s just the tech stack. On top of that, there are all the other data sources, like your bank and any internally developed software that’s emitting financially relevant data (basically all of those sources…).

Breadth can be very challenging, though how challenging depends in part on how novel your data sources are. (Data platforms and integration platforms as a service have helped systems folks simplify how data from many sources is governed and re-integrated elsewhere!)

Let’s imagine, for example, that you need to understand net profit per order. To engineer that into your data pipeline, you need to write code that calls your ERP’s REST API, handles authentication and validation, extracts the data as a JSON payload, and loads it into a central data store.

But you also need to tie variable commission expenses to your orders to get to net profit, and that data doesn’t live in your ERP but in your HRIS/Compensation platform. Unfortunately, that API requires you to send SOAP requests, so now you’re engineering a completely different auth process and then converting the XML responses into JSON.

That’s just a rudimentary example using only data formats from common APIs and doesn’t touch data structures, transformations, etc.
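To make the net-profit-per-order example concrete, here is a minimal sketch that uses only the Python standard library. Everything specific in it is an assumption made for illustration: the payloads are hard-coded stand-ins for real API responses, and the field names (`order_id`, `revenue`, `cost`, `OrderId`, `Amount`) are hypothetical rather than taken from any real ERP or HRIS. Authentication and the HTTP calls themselves are left out entirely.

```python
import json
import xml.etree.ElementTree as ET
from decimal import Decimal

# Hypothetical JSON body, standing in for an ERP REST API response.
ERP_JSON = """
{"orders": [
  {"order_id": "A-100", "revenue": 1200.00, "cost": 700.00},
  {"order_id": "A-101", "revenue": 450.00, "cost": 300.00}
]}
"""

# Hypothetical SOAP body, standing in for a compensation platform response.
HRIS_XML = """
<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <CommissionsResponse>
      <Commission><OrderId>A-100</OrderId><Amount>60.00</Amount></Commission>
      <Commission><OrderId>A-101</OrderId><Amount>22.50</Amount></Commission>
    </CommissionsResponse>
  </soap:Body>
</soap:Envelope>
"""


def parse_erp_orders(payload):
    """Return {order_id: gross profit}, parsing amounts as Decimal."""
    data = json.loads(payload, parse_float=Decimal)
    return {o["order_id"]: o["revenue"] - o["cost"] for o in data["orders"]}


def parse_commissions(payload):
    """Return {order_id: total commission} from the SOAP XML body."""
    root = ET.fromstring(payload.strip())
    totals = {}
    for node in root.iter("Commission"):
        order_id = node.findtext("OrderId")
        amount = Decimal(node.findtext("Amount"))
        totals[order_id] = totals.get(order_id, Decimal("0")) + amount
    return totals


def net_profit_per_order(gross_by_order, commission_by_order):
    """Subtract commissions from gross profit; missing commissions count as 0."""
    return {
        order_id: gross - commission_by_order.get(order_id, Decimal("0"))
        for order_id, gross in gross_by_order.items()
    }


net_profit = net_profit_per_order(
    parse_erp_orders(ERP_JSON), parse_commissions(HRIS_XML)
)
for order_id, profit in net_profit.items():
    print(order_id, profit)
```

Even in this toy version, each source needs its own parser before the two can be joined on a shared order ID, and that is the part that multiplies as sources are added.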

Then there’s depth.

Depth is arguably the harder problem to solve because it lacks a constraint in the ol’ scale department.

For small datasets, it’s not uncommon to build pipelines using in-memory processes (like building pandas DataFrames in Python and loading them to a data warehouse like Snowflake).

But as your datasets grow, you will hit a point where your infrastructure can’t handle the load (either your DAG pipelines take hours to run or you run out of memory entirely) and you need to move to a distributed process. Don’t even get me started on how to capture changed data on massive datasets if you didn’t think or know about this up front!
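One intermediate step between loading everything into memory and going fully distributed is to process the data in bounded chunks. The sketch below is a single-machine illustration of that idea using only the standard library. The column names (`customer_id`, `amount`) and the tiny in-memory CSV are assumptions for the example; a distributed engine would partition similar work across many workers rather than loop on one.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal
from itertools import islice


def iter_chunks(rows, chunk_size):
    """Yield lists of at most chunk_size rows from any iterable."""
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk


def revenue_by_customer(csv_file, chunk_size=10_000):
    """Aggregate amounts per customer while holding one chunk in memory."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    reader = csv.DictReader(csv_file)
    for chunk in iter_chunks(reader, chunk_size):
        for row in chunk:
            totals[row["customer_id"]] += Decimal(row["amount"])
    return dict(totals)


# Hypothetical sample data; a real pipeline would open a file or stream instead.
SAMPLE = io.StringIO(
    "customer_id,amount\n"
    "c1,10.00\n"
    "c2,5.50\n"
    "c1,2.25\n"
    "c3,7.00\n"
    "c2,1.50\n"
)

totals = revenue_by_customer(SAMPLE, chunk_size=2)
print(totals)
```

Memory use here is bounded by the chunk size plus the running totals, not by the size of the input. That holds only while the aggregate itself stays small, which is one reason very large datasets eventually push you toward a distributed process.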

Now, if you’re processing data in your ERP plus Excel and your data is growing nonlinearly, hitting API, concurrency, or contract limits, or exceeding Excel’s row limit during a monthly close, isn’t at all uncommon.
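To put the Excel row limit in perspective, here is a rough back-of-the-envelope sketch. An Excel worksheet holds at most 1,048,576 rows. The starting volume (100,000 rows per monthly close) and the 20% month-over-month growth rate are purely illustrative assumptions, not figures from any real company.

```python
EXCEL_MAX_ROWS = 1_048_576  # per-worksheet row limit in modern Excel


def months_until_limit(start_rows, monthly_growth_rate, limit=EXCEL_MAX_ROWS):
    """Count the months of compounding growth until rows exceed the limit."""
    if monthly_growth_rate <= 0:
        raise ValueError("monthly_growth_rate must be positive")
    rows = float(start_rows)
    months = 0
    while rows <= limit:
        rows *= 1 + monthly_growth_rate
        months += 1
    return months


# Illustrative assumptions: 100,000 rows today, growing 20% per month.
months = months_until_limit(start_rows=100_000, monthly_growth_rate=0.20)
print(f"Rows exceed the Excel limit after {months} months")
```

Under these assumed inputs, a dataset that uses less than a tenth of the worksheet today outgrows it in just over a year.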

Exponential growth will hit you in the face much faster than you might expect. Can your ERP handle it? Sure, they’ll take your money. A few years back, I ran a project to cut over an archive data stream from a well-known SaaS ERP to a data platform. The switch saved over $30k per month, almost half the contract size, and it took one internal engineer and one financial systems analyst less than a month.

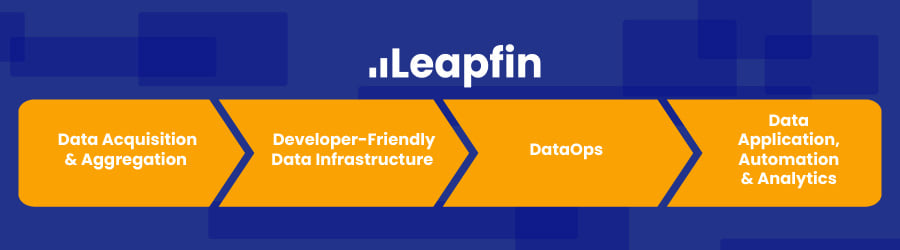

While the solutions to breadth and depth challenges are different, the underlying capabilities required to solve both are the same: deep data and software engineering expertise, plus crisp and unambiguous system requirements. Or a platform that embodies that same expertise, built by expert engineers and product thinkers to stay ahead of the voracious appetite of business data.

And that’s just how you solve the problem you knew about yesterday.

Conclusion

Boyer’s article serves as a reminder that data is a critical driver of business value and that it is important for folks to take a first principles approach when investing in data, data pipelines, and data platforms. By doing so, you can ensure that your business stays competitive and is well-positioned to take advantage of the opportunities that lie ahead.

Alternatively, you can allow your data to continue to grow in breadth and depth and rely more and more on assumptions and broad averages to run a business (or better yet make your ERP rep wealthy off data computation & storage arbitrage) while the rest of the world invests in data to feed their predictive analytics and AI.

Jeff Bezos is shaking in his weight room at the thought of running a business without the highest fidelity business metrics. But AWS will take its cut either way. The question is, how well will you run your business?
